BiasExplained: Pushing Algorithmic Fairness with Models and Experiments

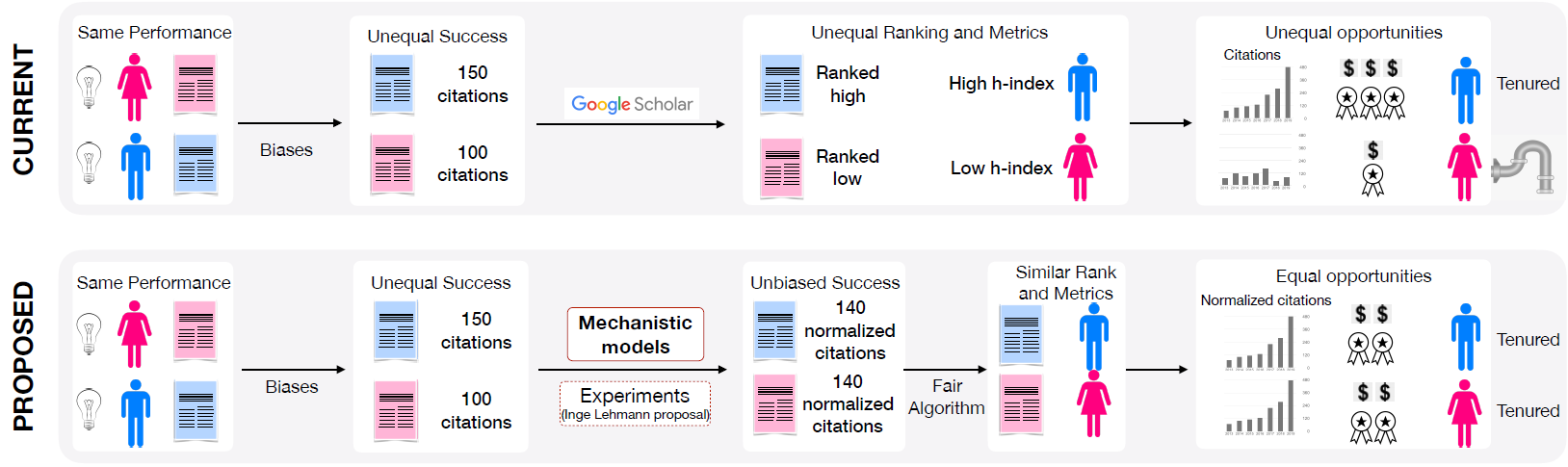

The project's goal is first to measure experimentally how bias emerges, and then to use these findings to develop mathematical models that explain how bias amplifies inequalities and distorts our assessment of quality through existing metrics and algorithms, especially in science. With these insights, I will develop fair algorithms and testable applications.

Data about popularity and impact are at the core of most measures and algorithms in society. Products are recommended based on user ratings. Trending news is featured on social media. In science, citation numbers are widely used to assess scientists and form the basis of recommendation engines like Google Scholar. However, such impact metrics reflect only a community’s reaction to a performance; they are not a measure of the performance itself. Because of their social nature, these metrics have one crucial issue: they build on biased data produced by people. For example, given equal performance, black/female researchers are less cited than white/male ones due to discriminatory citation cultures.

Because many crucial decisions use the output of metrics and rankings, the project asks:

- Given equal performance, what are the mathematical laws governing the evolution of inequality?

- What is the effect of the impact-based biases on the output of metrics and algorithms?

To tackle these questions, the project proposes to break new ground by combining mechanistic models with controlled experiments, applied to large-scale datasets. This will push new frontiers in algorithmic fairness. The project will:

1. run unprecedented experiments to measure bias;

2. develop new models to understand inequality;

3. improve the fairness of algorithms through de-biased impact measures.

As an application, the project will assess the biases in Danish research. If we lack rigorous tools to understand how biases distort success, we will never achieve a data science that informs our decisions in a fair way.
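To make the first research question concrete, the following is a toy sketch of how a small bias can compound under cumulative advantage. It is not the project's model: the function name `simulate_citation_shares`, the `bias` parameter, and the mean-field update rule are all assumptions chosen for illustration. Two groups start with equal citations and equal performance; each new citation is split in proportion to current citations, with group B's attractiveness discounted by `bias`.

```python
def simulate_citation_shares(steps, bias, initial=1.0):
    """Toy mean-field model of cumulative advantage between two groups.

    Hypothetical illustration only: both groups perform equally, but
    group B's chance of attracting a citation is discounted by `bias`.
    Returns group A's share of all citations after each step.
    """
    a = b = initial  # equal starting citation counts
    history = []
    for _ in range(steps):
        weight_a = a                  # attractiveness grows with past citations
        weight_b = (1.0 - bias) * b   # same mechanism, discounted by the bias
        total = weight_a + weight_b
        # One unit of citation is split in expectation between the groups.
        a += weight_a / total
        b += weight_b / total
        history.append(a / (a + b))
    return history


if __name__ == "__main__":
    for bias in (0.0, 0.05, 0.2):
        shares = simulate_citation_shares(steps=1000, bias=bias)
        print(f"bias={bias:.2f}  final share of group A={shares[-1]:.3f}")
```

With `bias=0.0` the two groups stay exactly balanced. With any positive bias, group A's share rises at every step, because group A's share of each new citation always exceeds its current share of the total. A real analysis would need stochastic dynamics and empirical calibration, which this sketch deliberately omits.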

Yanmeng Xing, Ying Fan, Roberta Sinatra, An Zeng. Academic mentees succeed in big groups, but thrive in small groups. 2022. arXiv preprint. DOI: https://doi.org/10.48550/arXiv.2208.05304

Researchers

| Name | Title | Phone |
|---|---|---|

Funded by:

Project: Bias Explained: Pushing Algorithmic Fairness with Models and Experiments

Period: 2021 - 2026

Contact

Principal Investigator: Roberta Sinatra, robertasinatra@sodas.ku.dk

Postdoc: Sandro Sousa, ssou@itu.dk